Most Apple Immersive Video has focused on the “real”: footage captured with stereoscopic rigs or the Blackmagic URSA Cine Immersive, often showcasing natural environments. But the future of the format isn’t just a mirror of our physical world; it’s also a canvas for our imagination. In this edition, we hear from two amazing artists who prove that synthetic worlds are the next big leap for the medium.

VJ WOOO is the creator of Nebula Junkyard, an ecstatic space-music video. Gryun Kim and VJ WOOO collaborated on WAI-000, a cinematic cyberpunk teaser that feels as bracing today as The Matrix did in 1999. We asked them to demystify the workflow of building worlds for Apple Vision Pro.

Gryun, WAI-000 originally began as a 2D piece by Taehoon Park, VJ WOOO and yourself, which you and VJ WOOO later expanded into an immersive/VR version. But the original has quite a few “macro” shots, which is rare in this format. Were you concerned about how that would translate when objects are just inches from the viewer’s face?

I wanted to take a bold approach. One challenge was that the shot was originally designed with a noticeable tilt. In immersive space, a tilted camera makes the entire world feel slanted, which is uncomfortable. Our solution was to scale up the environment and let the mold itself appear slightly tilted, rather than the whole world. In immersive media, scale itself becomes an expressive tool: we found we could amplify emphasis in ways that flat images simply can’t.

Was there a moment when you realized an object was “too close”? Did it feel empowering to be able to reposition it instantly?

If an object is too close, the eyes are forced to converge unnaturally. We found that objects generally need to be at least about 15 cm from the viewer’s eyes to feel comfortable. But the real “hack” we used was scaling up the entire scene rather than moving the camera closer. Because this was all CG, we had the freedom to experiment with staging solutions that would be impossible in the real world.
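The geometry behind the “scale the scene, not the camera” advice can be sketched in a few lines. This is a simplified model for intuition, not the artists’ actual tooling: it assumes a 63 mm viewer IPD, treats the 15 cm figure from the interview as a hard threshold, and ignores headset optics. It shows that uniformly scaling an object and its distance about the camera leaves the object’s angular size unchanged while pushing it past the comfort threshold and lowering the vergence angle the eyes must hold. All function names are hypothetical.

```python
import math

# Assumed average adult interpupillary distance (IPD) in metres; real viewers vary.
DEFAULT_IPD_M = 0.063
# Comfort threshold quoted in the interview (about 15 cm from the eyes).
MIN_COMFORT_DISTANCE_M = 0.15


def vergence_deg(distance_m: float, ipd_m: float = DEFAULT_IPD_M) -> float:
    """Angle in degrees between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def angular_size_deg(size_m: float, distance_m: float) -> float:
    """Visual angle in degrees subtended by an object centred on the line of sight."""
    return math.degrees(2 * math.atan((size_m / 2) / distance_m))


def scale_scene(size_m: float, distance_m: float, factor: float) -> tuple[float, float]:
    """Uniformly scale an object and its distance about the (fixed) camera position."""
    return size_m * factor, distance_m * factor


def is_comfortable(distance_m: float) -> bool:
    """Hypothetical check against the ~15 cm rule of thumb."""
    return distance_m >= MIN_COMFORT_DISTANCE_M


# A 5 cm detail only 5 cm from the camera, then the whole scene scaled up 4x.
part_size, part_dist = 0.05, 0.05
big_size, big_dist = scale_scene(part_size, part_dist, 4.0)
print(f"before: {angular_size_deg(part_size, part_dist):.1f} deg wide, "
      f"vergence {vergence_deg(part_dist):.1f} deg, comfortable={is_comfortable(part_dist)}")
print(f"after:  {angular_size_deg(big_size, big_dist):.1f} deg wide, "
      f"vergence {vergence_deg(big_dist):.1f} deg, comfortable={is_comfortable(big_dist)}")
```

The detail fills the same slice of the field of view in both cases, but only the scaled version sits beyond the comfort distance, which is why scaling the world can deliver a “macro” feel without forcing extreme convergence.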

What was the biggest “No-No” you discovered when translating traditional cinematography into this 180-degree immersive format?

The most critical thing to avoid is dynamic camera motion without a clear visual focus point. In WAI-000, we have a tunnel sequence. In earlier versions, we observed strong nausea reactions. By adding a stable, code-like text element in the center as a “visual anchor,” we gave viewers a way to stabilize their gaze. This dramatically reduced discomfort.

Rendering 8K at 90fps is an enormous task. How long did this actually take to get out of the machine?

This workload is far beyond what a typical workstation can handle. We used RNDR (OTOY’s cloud-based GPU farm) to render these massive shots without compromise. Moving from 4K to 8K roughly quadruples render cost, because each frame has four times as many pixels. To help manage this, we used AI-assisted frame interpolation to convert 24fps to 48fps; since only half of the final frames had to be rendered, this significantly reduced total render time while keeping the motion smooth.
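The render-cost arithmetic can be checked with a back-of-the-envelope model. This sketch assumes render time scales linearly with the number of pixels rendered, uses standard UHD frame sizes as stand-ins (the per-eye resolution of an actual Apple Immersive Video master is different), and treats interpolated frames as free. Rendering two eyes doubles both sides of each comparison, so the ratios are unaffected. All names are illustrative.

```python
# Stand-in frame sizes (width, height); not the actual AIV per-eye resolution.
RES_4K = (3840, 2160)
RES_8K = (7680, 4320)


def pixel_count(res: tuple[int, int]) -> int:
    width, height = res
    return width * height


def rendered_frames(duration_s: float, render_fps: int) -> int:
    """Frames the renderer must produce; interpolated frames are added afterwards."""
    return round(duration_s * render_fps)


def relative_cost(res: tuple[int, int], render_fps: int,
                  baseline_res: tuple[int, int], baseline_fps: int,
                  duration_s: float = 60.0) -> float:
    """Cost ratio versus a baseline, assuming cost is linear in pixels rendered."""
    work = pixel_count(res) * rendered_frames(duration_s, render_fps)
    base = pixel_count(baseline_res) * rendered_frames(duration_s, baseline_fps)
    return work / base


# 8K vs 4K at the same frame rate: four times the pixels per frame.
print(relative_cost(RES_8K, 48, RES_4K, 48))  # 4.0
# Rendering 8K at 24 fps and interpolating to 48 fps halves the rendered frames.
print(relative_cost(RES_8K, 24, RES_8K, 48))  # 0.5
```

Real renders rarely scale perfectly linearly (scene load time, denoising, and memory pressure all add overhead), so treat these ratios as a lower bound on planning estimates.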

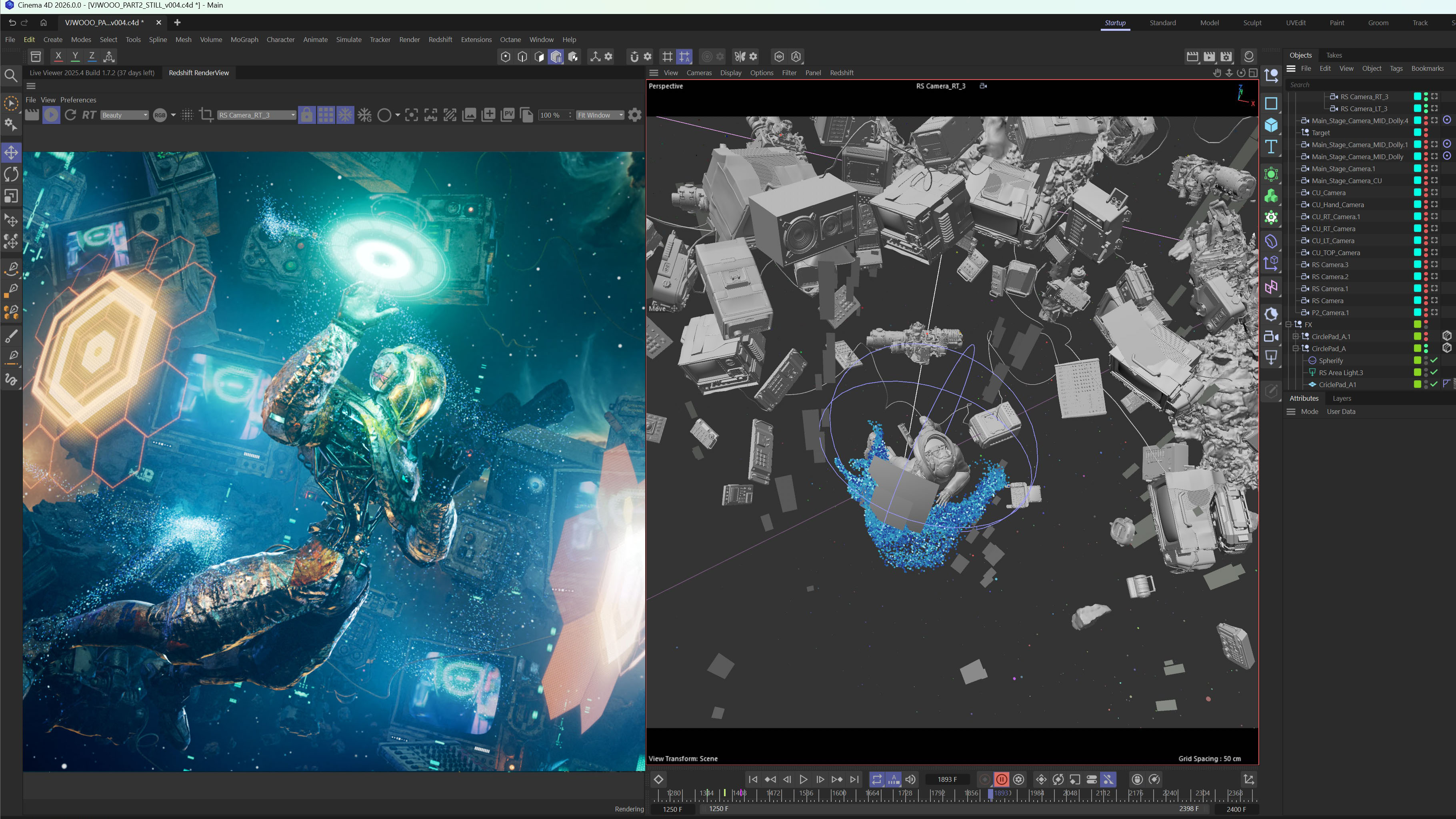

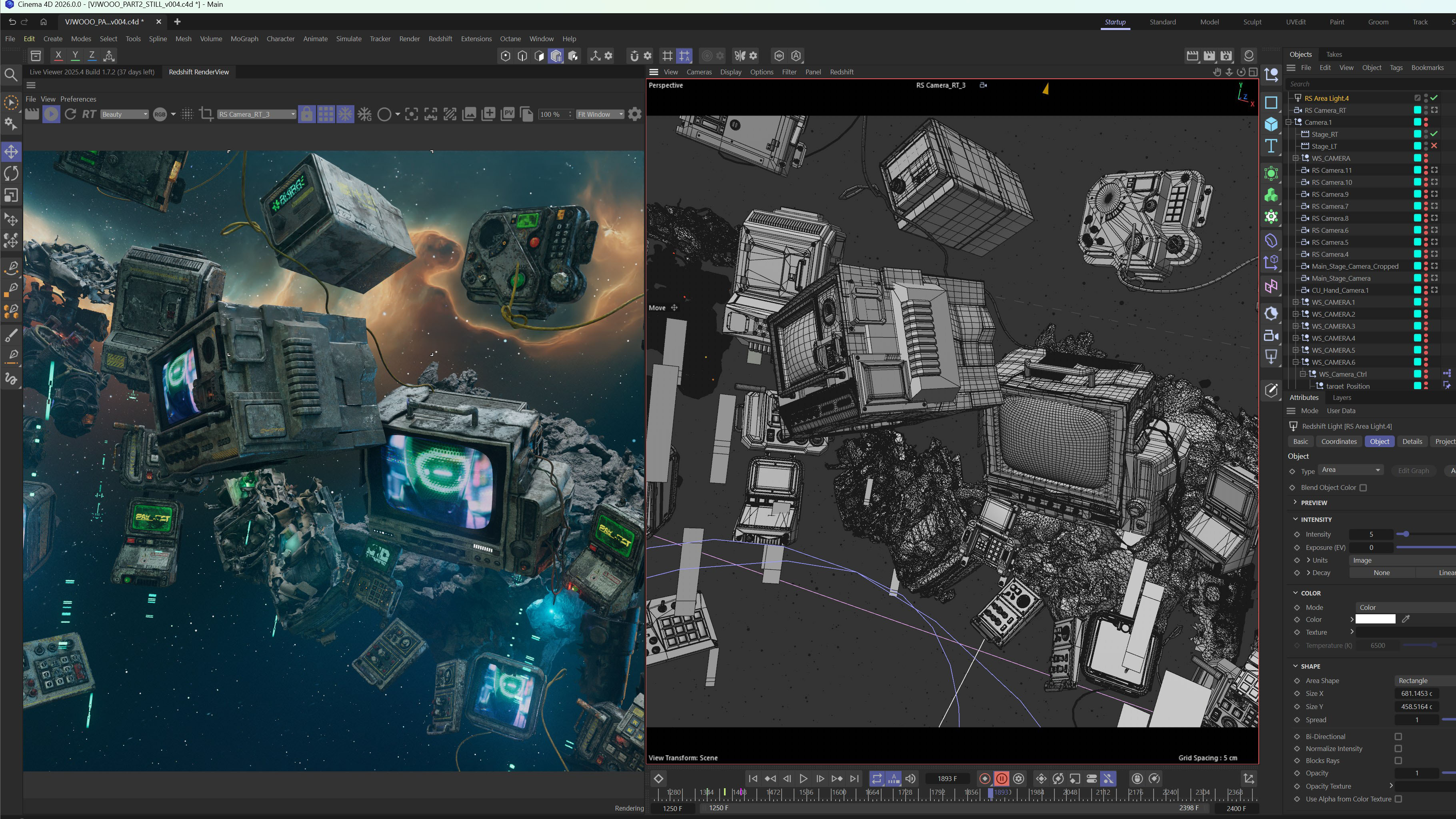

VJ WOOO, Nebula Junkyard has this incredible “worn-down space equipment” vibe. How did you handle the “human scale” of all that floating junk?

The project actually started with assets I had originally built for a sci-fi short. Modeling and kitbashing these custom pieces was a critical part of the process. I approached the design by stepping into the shoes of the virtual character, imagining exactly what kind of equipment I would personally gather to build my own stage in that world.

Traditional CG animation often uses shaky cams and fast movements. Did you have to invent any new rules for moving the camera in Nebula Junkyard?

In most immersive projects, we are constrained by the viewer’s innate sense of gravity. However, since this story exists in a zero-gravity environment, I had much more freedom. I was able to implement bolder, more experimental camera movements that lean into that weightlessness, heightening the sensation of floating in deep space.

For the artists reading this: if someone has a 3D character or a world they love in Blender today, what is the very first thing they should do to move toward an immersive release?

Gryun: Immersive content is not just about watching; it’s about staging a virtual world. Scale, camera movement, and lighting all need to be treated as if you’re building a physical set. Because render times are so long, rely heavily on still images for validation early on. Catch issues before you hit “render”.

VJ WOOO: It is essential to experiment with the relationship between various interpupillary distances (IPDs) and the distance between the camera and your objects. You need to see how your work feels in a true spatial context. It’s like comparing the world seen through the eyes of an ant, as in “Ant-Man,” with the world seen by a giant: the same object tells a completely different story depending on that perspective.
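One first-order way to reason about these IPD experiments: if the stereo camera’s baseline differs from the viewer’s IPD, the scene tends to read as scaled by roughly the viewer’s IPD divided by the camera baseline. The sketch below assumes a 63 mm viewer IPD and ignores convergence settings, lens projection, and monocular depth cues, so it is intuition rather than a calibration tool. A baseline one tenth of the viewer’s IPD gives the ant’s-eye view; a baseline ten times wider gives the giant’s view. The function names are hypothetical.

```python
# Assumed viewer IPD in metres; swap in the range of viewers you want to test.
VIEWER_IPD_M = 0.063


def perceived_scale(camera_ipd_m: float, viewer_ipd_m: float = VIEWER_IPD_M) -> float:
    """Approximate factor by which the viewer perceives the scene's size.

    A camera baseline narrower than the viewer's IPD makes the world read as
    larger (an ant's view); a wider baseline makes it read as a miniature
    (a giant's view). Simple disparity-matching model only.
    """
    return viewer_ipd_m / camera_ipd_m


def perceived_distance(object_distance_m: float, camera_ipd_m: float,
                       viewer_ipd_m: float = VIEWER_IPD_M) -> float:
    """Distance at which an object seems to sit when its disparity is shown to the viewer."""
    return object_distance_m * perceived_scale(camera_ipd_m, viewer_ipd_m)


# Sweep a few hypothetical camera baselines for an object 1 m from the rig.
for camera_ipd in (0.0063, 0.063, 0.63):
    print(f"camera IPD {camera_ipd * 1000:.1f} mm -> world x{perceived_scale(camera_ipd):.1f}, "
          f"object at 1 m reads as {perceived_distance(1.0, camera_ipd):.2f} m")
```

Rendering the same still at several baselines and reviewing them in the headset is a cheap way to pick the “character” of a scene before committing to a full animation render.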

See the films: Nebula Junkyard and WAI-000 are available for free in Theater on Apple Vision Pro.

Curated by Sandwich Vision • Newsletter 006

Behind the Scenes: Synthetic AIV Workflow

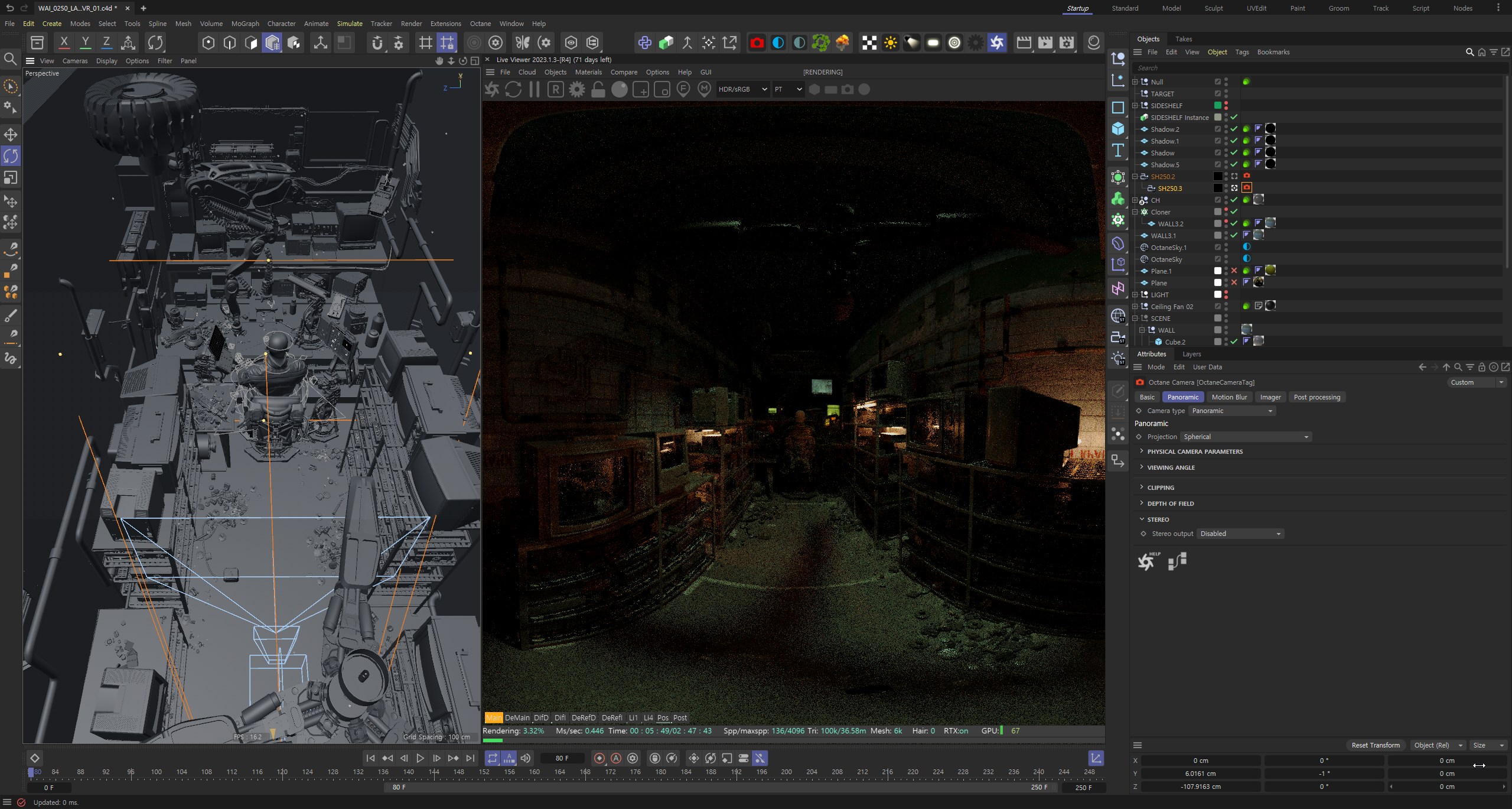

The stereoscopic 180-degree camera rig and field-of-view indicators used to define the immersive perspective.

A viewport comparison showing the environment scaled up around the camera to achieve macro detail without proximity discomfort.

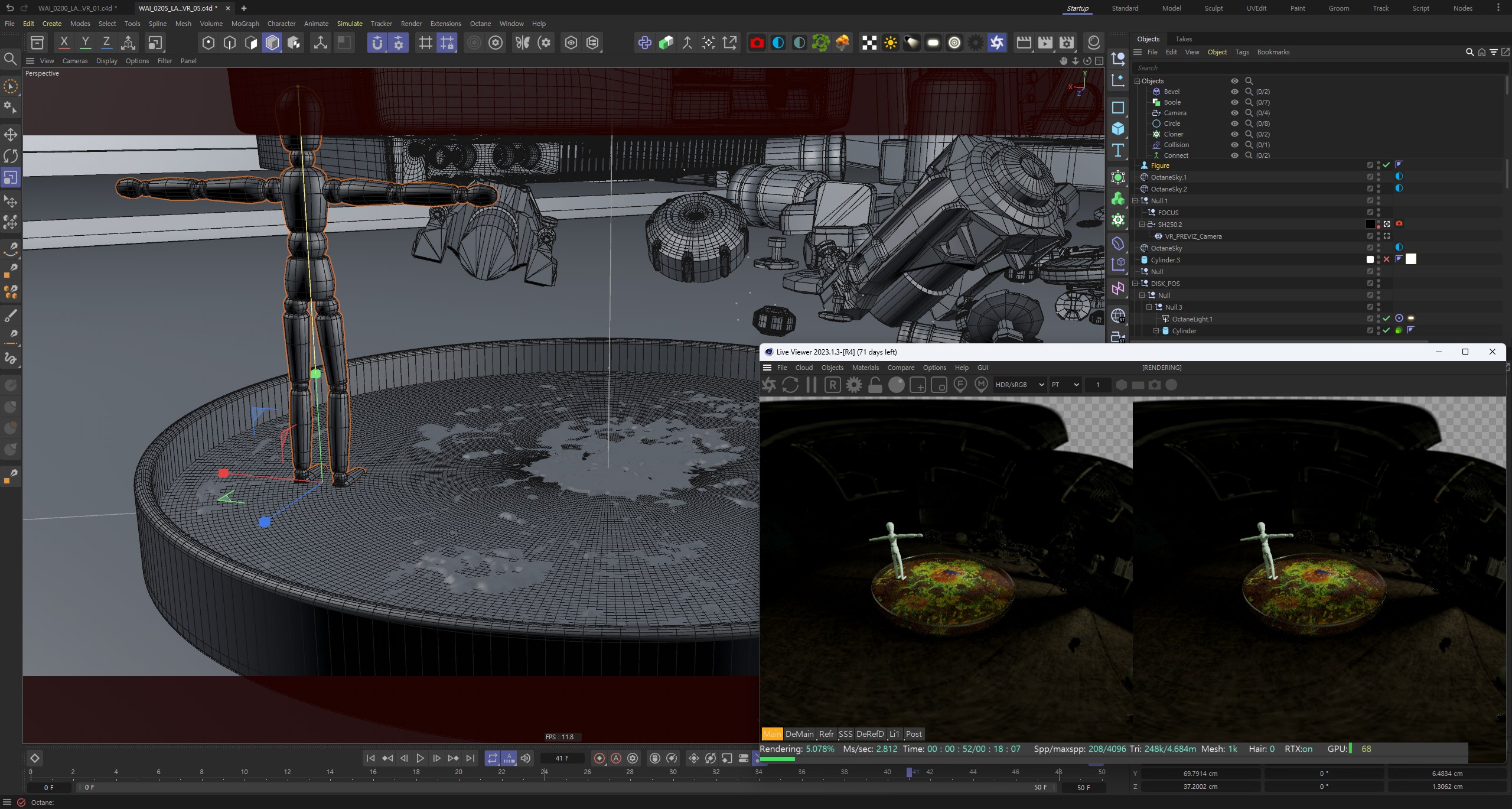

The spatial arrangement of the robot and floating instruments within the 180-degree virtual set.

High-fidelity texture and mesh detailing on the space assets designed for 8K per-eye resolution.